
Quick summary: Cloud analytics is helping utilities address their most pressing challenges in regulatory compliance, digital transformation, asset management, and customer service.

IT organizations are moving to the cloud faster than ever. Gartner forecasts that by 2025, 85 percent of businesses will embrace a cloud-first principle for their IT strategy. Making the cloud part of an organization’s IT strategy creates an opportunity to capture the value the cloud offers, and analytics is one area where that case keeps getting stronger: migrating analytics infrastructure and processing to the cloud.

Most models built by data scientists never reach production. In a survey by Rexer Analytics, only 13 percent of data scientists said that their models make it to production. This gap often arises because IT processes are immature or unable to support model deployment, whether on premises or in the cloud. Cloud platforms and infrastructure-as-code tools give analytics teams the opportunity to deploy and test their models in production environments quickly.

This gap is becoming more apparent in the utilities space, because utility companies often operate under more regulation, and stricter IT standards, than companies in other industries. These compliance requirements can pose a unique challenge for IT leaders in the utility industry. However, IT also has an opportunity to use the cloud to support strategies that address these and other challenges.


The cloud advantage

Digital transformation has turned utility providers into software companies. They must react quickly to changing customer needs while still delivering stable service to their customers. They must also make fast decisions based on the immense amount of data they gather and, as a highly regulated industry, be able to explain the decisions they base on that data. DevSecOps and analytics teams need to improve continually and communicate efficiently to achieve their organization’s goals.

Traditional approaches to developing IT infrastructure involved long lead times for IT to build solutions to respond to business needs. Building these solutions required requests to procurement to secure the underlying hardware, software, and networking for the systems to run on. In addition, decisions to scale up or down can demand weeks or months of lead time, slowing down the team’s capacity to experiment and iterate, hindering innovation, and reducing the value that IT can provide in terms of analytics solutions.

With adoption of the public cloud, the time required for hardware provisioning drops from months to minutes. Developers can provision and de-provision resources at the click of a button, which helps companies build a culture of innovation and scale. Cloud migrations also add value beyond a pure “lift and shift” approach (moving applications from on premises into the cloud as they are). Cloud providers offer platforms to scale compute and data operations on demand, along with a large suite of integrations that organizations can use to build scalable architectures for their data, ETL, and ML training and inference processes.


Challenges for utility companies

The utility sector faces some specific challenges that need to be managed to meet regulatory standards and respond to the needs of the business.

Cloud analytics for regulatory compliance

As part of the machine learning model lifecycle, transparency of process is paramount, both for internal reviews by data science boards and for external auditors. In our work with utility clients, we place particular emphasis on model insight, traceability, and data auditing. Here are a few of the ways cloud analytics solutions can provide support:

- Model training and inference code is versioned, and model evaluation metadata is stored in a centralized service.

- Model training and inference solutions are explainable and evaluated for bias. Decisions based on utility models can affect thousands or even millions of customers, and utilities are usually required to explain their decisions to state regulatory boards.

- Training datasets are versioned for model tracking and training reproducibility and are managed according to data governance standards.

- Model drift is tracked and reported. Depending on model requirements, retraining triggers are built in to ensure that models stay current with the latest training data.
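One common way to implement the drift-tracking and retraining-trigger item in the list above is the population stability index (PSI), which compares the distribution of a feature in recent production data against the training data. The sketch below is a minimal, dependency-free version under stated assumptions: equal-width bins derived from the training sample, and a retraining threshold of 0.2, which is a widely used rule of thumb rather than a regulatory standard. Both the bin count and the threshold are assumptions to tune per model.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a reference (training) sample and a recent (production) sample.

    Bin edges are equal-width over the reference sample's range; values outside
    that range are clamped into the first or last bin. A small epsilon avoids
    log(0) when a bin is empty in one of the samples.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [c / len(values) for c in counts]

    eps = 1e-6
    psi = 0.0
    for e, a in zip(proportions(expected), proportions(actual)):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def needs_retraining(psi: float, threshold: float = 0.2) -> bool:
    """Assumed retraining trigger: PSI at or above the threshold signals drift."""
    return psi >= threshold
```

In a scheduled monitoring job, a `True` result from `needs_retraining` could raise an alert or start a retraining pipeline, and the PSI value itself can be logged as part of the model's drift report.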

By focusing on model transparency, our clients found they could audit their processes better than ever before, driving a fundamental shift from reactive to proactive processes for defending their decisions. This shift frees them to invest in innovation, such as additional MLOps processes to manage the model lifecycle and inference infrastructure.
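The versioning practices described in this section, which underpin those MLOps processes, can be sketched as a content hash for each training dataset plus an append-only record that ties a model run to its code version, data, and evaluation metrics. This is a minimal illustration, not any particular product’s API: `RunRegistry` is a hypothetical in-memory stand-in for a managed metadata store, and the code version is assumed to be something like a Git commit SHA.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


def dataset_fingerprint(rows: List[dict]) -> str:
    """Deterministic SHA-256 of a dataset.

    Each row is serialized as JSON with sorted keys, so key order inside a row
    does not change the hash, while any change to a value does.
    """
    h = hashlib.sha256()
    for row in rows:
        h.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        h.update(b"\n")
    return h.hexdigest()


@dataclass
class ModelRunRecord:
    model_name: str
    code_version: str          # assumption: e.g. a Git commit SHA
    dataset_hash: str          # output of dataset_fingerprint
    metrics: Dict[str, float]  # evaluation metadata for auditors and reviewers
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class RunRegistry:
    """Hypothetical append-only registry used only for illustration."""

    def __init__(self) -> None:
        self._records: List[ModelRunRecord] = []

    def log(self, record: ModelRunRecord) -> int:
        self._records.append(record)
        return len(self._records) - 1  # position doubles as a run ID

    def history(self, model_name: str) -> List[ModelRunRecord]:
        return [r for r in self._records if r.model_name == model_name]

    def to_jsonl(self) -> str:
        """Export all records as JSON Lines, e.g. for an audit package."""
        return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in self._records)
```

Because the dataset hash is recorded alongside the code version, an auditor can later confirm that a model was trained on exactly the data that was reviewed.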


Cloud analytics for digital transformation

In larger corporations, data is often siloed within the groups that create it. Because data is dispersed, data consumers often have difficulty finding it and gaining access to it. Data can also be hosted on a variety of database types, which makes interfacing difficult and consumes time that consumer teams could spend producing meaningful analytics. For example, consumer teams might connect to on-premises development databases to avoid the process of getting production database access, then misuse development data as production data when building data products.

Cloud platforms can help remedy this by providing consistent mechanisms for sharing and consuming data across teams. On the security side, defining identity-based and resource-based access controls makes it possible both to create fine-grained permissions and to handle data access requests consistently across the enterprise. When building models, data scientists often want to bring in a wide variety of features at first, then use feature selection to pare them down to a specific subset. Making datasets more widely available improves data scientists’ ability to create valuable models.
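To make the idea of combining identity-based and resource-based access controls concrete, here is a simplified model loosely inspired by how cloud IAM systems evaluate policies: an explicit deny always wins, an allow from either the caller’s own policies or the dataset’s policy grants access, and anything else is denied by default. It is not any provider’s actual evaluation logic (real systems add conditions, cross-account rules, and more), and the role and dataset names in the usage are hypothetical.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Statement:
    effect: str                            # "Allow" or "Deny"
    actions: Tuple[str, ...]               # e.g. ("read",)
    resources: Tuple[str, ...]             # glob patterns, e.g. ("meters/prod/*",)
    principals: Tuple[str, ...] = ("*",)   # glob patterns for who the statement covers


def _applies(stmt: Statement, principal: str, action: str, resource: str) -> bool:
    return (
        action in stmt.actions
        and any(fnmatchcase(resource, p) for p in stmt.resources)
        and any(fnmatchcase(principal, p) for p in stmt.principals)
    )


def is_allowed(
    principal: str,
    action: str,
    resource: str,
    identity_statements: Iterable[Statement],  # attached to the caller's role
    resource_statements: Iterable[Statement],  # attached to the dataset
) -> bool:
    matching = [
        s
        for s in [*identity_statements, *resource_statements]
        if _applies(s, principal, action, resource)
    ]
    if any(s.effect == "Deny" for s in matching):
        return False  # explicit deny takes precedence over any allow
    return any(s.effect == "Allow" for s in matching)  # otherwise default deny
```

For example, an analyst role with `Statement("Allow", ("read",), ("meters/prod/*",))` can read `meters/prod/readings`, while a dataset policy containing `Statement("Deny", ("read",), ("meters/prod/readings",), ("role/contractor*",))` blocks contractor roles from that dataset even if their own policies allow broader reads.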

Cloud analytics for asset and work management

Utility companies have a large array of assets in the field and a finite workforce to maintain them. Tracking sensor data and feeding it into machine learning systems improves the business’s ability to access and analyze that data and to optimize operational work. This can take a wide variety of forms, such as predictive asset-failure models or anomaly detection models that help pinpoint unforeseen issues in the larger system that need to be addressed.
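As a small illustration of the anomaly detection idea, the sketch below flags sensor readings that deviate sharply from their recent history using a rolling z-score. It is a deliberately simple baseline, not the method used in any particular utility’s models; the window size (24 readings, e.g. hourly data over a day) and the 3-standard-deviation threshold are assumptions.

```python
from collections import deque
from statistics import mean, pstdev
from typing import List, Sequence


def rolling_zscore_anomalies(
    readings: Sequence[float], window: int = 24, threshold: float = 3.0
) -> List[int]:
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the preceding `window` readings.

    The first `window` readings are never flagged because there is not yet
    enough history. Flagged readings still enter the history, so a sustained
    level shift stops being flagged once it becomes the new normal.
    """
    history: deque = deque(maxlen=window)
    anomalies: List[int] = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(value)
    return anomalies
```

For a transformer temperature series that cycles between 20.0 and 21.0 with a single spike to 35.0, only the index of the spike is returned.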

IoT devices are common in the utility industry and are usually part of real-time data and analytics solutions. These devices provide high-resolution data that can help utility companies perform pre-emptive maintenance on their infrastructure or notify customers that they are actively working to fix an issue, which improves customer satisfaction and brings other long-term benefits. Cloud providers offer specialized solutions to connect to data streams from IoT devices, derive insights from them, and make data-driven decisions quickly. These solutions can run analytics on streaming data and connect to business intelligence (BI) tools for decision makers.
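A core building block of streaming analytics on IoT data is windowed aggregation: grouping a continuous stream of readings into fixed time windows and emitting a summary when each window closes. The sketch below shows a tumbling-window average per device plus a simple alert filter. It assumes events arrive in timestamp order and ignores late data, which managed streaming services handle for you at scale; the device IDs and temperature limit are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

Event = Tuple[str, float, float]            # (device_id, timestamp in seconds, reading)
WindowStat = Tuple[float, str, float]       # (window_start, device_id, average)


def tumbling_window_averages(
    events: Iterable[Event], window_s: float = 60.0
) -> Iterator[WindowStat]:
    """Yield per-device averages each time a fixed, non-overlapping window closes."""
    current_start = None
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for device, ts, value in events:
        start = ts - (ts % window_s)  # start of the window this event belongs to
        if current_start is not None and start != current_start:
            for d in sorted(sums):    # window closed: emit and reset
                yield current_start, d, sums[d] / counts[d]
            sums.clear()
            counts.clear()
        current_start = start
        sums[device] += value
        counts[device] += 1
    if current_start is not None:     # flush the final open window
        for d in sorted(sums):
            yield current_start, d, sums[d] / counts[d]


def alerts(window_stats: Iterable[WindowStat], limit: float) -> List[WindowStat]:
    """Keep only windows whose average exceeds the limit (e.g. to notify crews)."""
    return [(start, d, avg) for start, d, avg in window_stats if avg > limit]
```

Feeding `("xfmr-1", 0, 70.0), ("xfmr-1", 30, 80.0), ("xfmr-1", 65, 95.0), ("xfmr-1", 90, 105.0)` through a 60-second window produces averages of 75.0 and 100.0, and an alert limit of 90.0 flags only the second window.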

Utilities operate in a highly regulated industry, and their workforce is distributed across many sites and spends most of its time away from the office. Cloud providers offer services that let employees work from anywhere, with built-in security and authentication through identity management solutions. In addition, cloud alerting and logging systems are available to monitor, prevent, log, and report malicious activity by users inside and outside the company.
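As one example of the kind of rule such alerting systems evaluate over authentication logs, the sketch below flags users who accumulate too many failed sign-ins within a sliding time window, a common signal of password-guessing attempts. The log format, the limit of five failures, and the five-minute window are assumptions for illustration; a real deployment would express this as a rule in the provider’s monitoring service.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, List, Tuple

LogEvent = Tuple[float, str, str]  # (timestamp in seconds, user, "success" or "failure")


def failed_login_bursts(
    log_events: Iterable[LogEvent], max_failures: int = 5, window_s: float = 300.0
) -> List[Tuple[float, str]]:
    """Return (timestamp, user) pairs where a user reached `max_failures`
    failed sign-ins within `window_s` seconds. Events must be time-ordered."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    flagged: List[Tuple[float, str]] = []
    for ts, user, outcome in log_events:
        if outcome != "failure":
            continue
        q = recent[user]
        q.append(ts)
        while q and ts - q[0] > window_s:  # drop failures outside the window
            q.popleft()
        if len(q) >= max_failures:
            flagged.append((ts, user))
            q.clear()  # reset so one burst raises a single alert
    return flagged
```

Five failures from one account within 40 seconds would be flagged, while five failures spread 100 seconds apart would not, because no five of them fall inside the same five-minute window.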

One challenge utility companies are grappling with is that 50 percent of the utility workforce is expected to retire in the next decade or so. As a result, many experts may need to capture their knowledge in augmented reality (AR)-enabled solutions so their organizations can preserve it in a central knowledge network and use it to train future generations of workers. Cloud providers offer various tools for creating high-quality virtual reality (VR), AR, and 3D applications with little or no programming expertise. This helps companies save time and money in reaching their knowledge transfer goals and opens up more use cases for AR applications in the utilities industry.

Approximately 50 percent of the utility workforce is expected to retire in the next five to ten years.

Cloud analytics for customer service

In addition to managing assets, utility companies have a customer-facing side. Customer support is a large part of the business, whether that means answering customers’ billing questions or working with building developers to connect new construction to utility infrastructure. Cloud technologies not only make it possible to build scalable cloud-native applications that serve customers, but also give the company access to managed services for customer-focused products, such as a connected-customer chatbot built with Amazon Lex.
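To show roughly how such a chatbot hands work to custom code, here is a sketch of an AWS Lambda fulfillment function for an Amazon Lex V2 bot. It assumes the Lex V2 Lambda event and response shapes (`sessionState.intent`, `dialogAction` types `Close` and `ElicitSlot`, and a `messages` list); verify those against the current Lex V2 documentation before relying on them. The `CheckOutageStatus` intent, the `ZipCode` slot, and the outage lookup table are hypothetical.

```python
from typing import Optional

# Hypothetical outage data; a real bot would query an outage-management system.
KNOWN_OUTAGES = {"98101": "crews are on site, estimated restoration 6:00 p.m."}


def _respond(intent: dict, action: dict, message: str) -> dict:
    """Assemble a Lex V2-style response with one plain-text message."""
    return {
        "sessionState": {"dialogAction": action, "intent": intent},
        "messages": [{"contentType": "PlainText", "content": message}],
    }


def close(intent: dict, message: str) -> dict:
    intent["state"] = "Fulfilled"
    return _respond(intent, {"type": "Close"}, message)


def elicit_slot(intent: dict, slot: str, message: str) -> dict:
    intent["state"] = "InProgress"
    return _respond(intent, {"type": "ElicitSlot", "slotToElicit": slot}, message)


def slot_value(intent: dict, name: str) -> Optional[str]:
    slot = (intent.get("slots") or {}).get(name)
    if not slot or not slot.get("value"):
        return None  # slot not filled yet
    return slot["value"].get("interpretedValue")


def lambda_handler(event: dict, context: object) -> dict:
    intent = event["sessionState"]["intent"]
    if intent["name"] != "CheckOutageStatus":
        return close(intent, "Sorry, I can't help with that yet.")
    zip_code = slot_value(intent, "ZipCode")
    if zip_code is None:
        return elicit_slot(intent, "ZipCode", "What ZIP code should I check?")
    status = KNOWN_OUTAGES.get(zip_code)
    if status:
        return close(intent, f"There is a known outage in {zip_code}: {status}")
    return close(intent, f"We have no reported outages in {zip_code}.")
```

Keeping the response builders separate from the business logic makes it straightforward to add intents such as billing questions without repeating the response format.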

Cloud analytics for uncovering competitive advantages

Cloud adoption for analytics can increase the scalability of analytics within the organization, the accessibility of data across teams, and the velocity with which the business can create analytics that enable it to do more with data. Analytics can also enable IT to promote the mission of the business more efficiently and effectively.

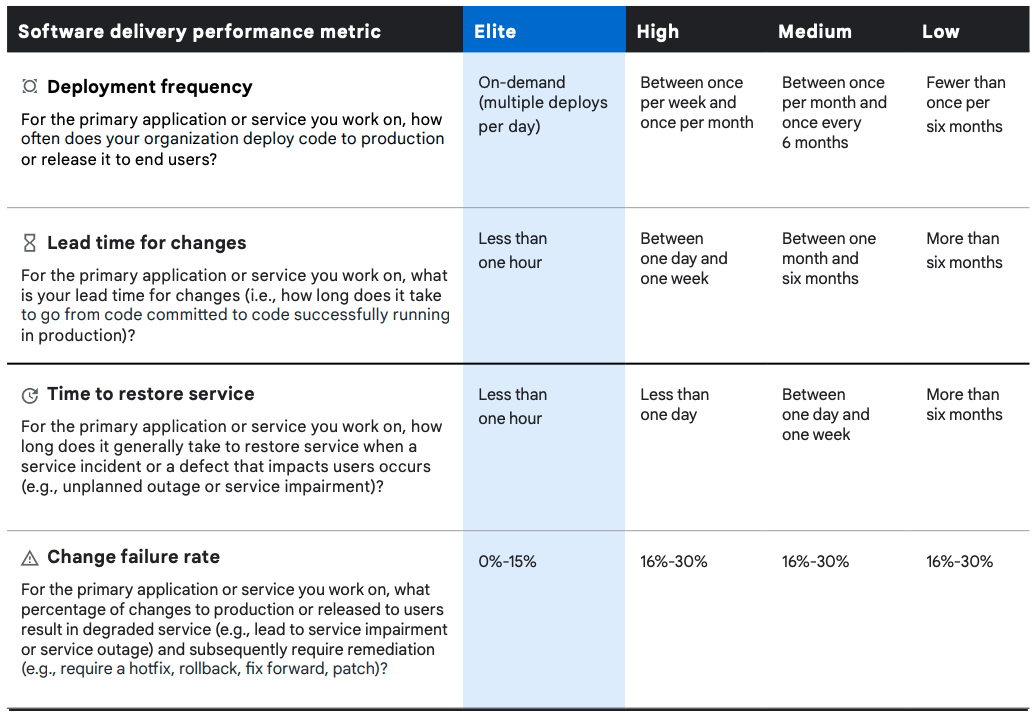

The DevOps Research and Assessment (DORA) team at Google Cloud conducted research over seven years and gathered data from more than 32,000 professionals across the globe. In its study (Accelerate State of DevOps), DORA uses four measures to evaluate Software Delivery and Operational (SDO) performance, with a focus on system-level outcomes:

- Throughput: lead time for changes and deployment frequency

- Stability: time to restore service and change failure rate

Together, these measures quantify delivery performance, which affects how quickly and reliably organizations can make data-driven decisions backed by explainable machine learning.
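The four measures are straightforward to compute once deployments are recorded. The sketch below derives them from a hypothetical list of deployment records over a reporting period. Two simplifications are assumptions: lead time runs from commit to production deployment, and time to restore is measured from the failed deployment rather than from the moment users were first affected, as DORA’s definition intends.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    committed_at: datetime                 # when the change was committed
    deployed_at: datetime                  # when it reached production
    failed: bool = False                   # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deployments: List[Deployment], period_days: float) -> dict:
    if not deployments:
        raise ValueError("need at least one deployment in the period")
    failures = [d for d in deployments if d.failed]
    restores = [
        _hours(d.restored_at - d.deployed_at)
        for d in failures
        if d.restored_at is not None
    ]
    return {
        # Throughput
        "deployment_frequency_per_day": len(deployments) / period_days,
        "median_lead_time_hours": median(
            _hours(d.deployed_at - d.committed_at) for d in deployments
        ),
        # Stability
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore_hours": median(restores) if restores else None,
    }
```

For four deployments in a week with lead times of 2, 4, 6, and 8 hours, one of which failed and was restored an hour later, this reports a median lead time of 5 hours, a change failure rate of 0.25, and a median time to restore of 1 hour.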

Fast-changing industries like utilities must deliver and operate software solutions quickly and reliably to address climate-change consequences such as wildfires. The faster their analytics teams can improve models, run experiments, and receive feedback, the sooner they can mitigate risk through actions such as pre-emptive maintenance and public safety power shut-offs (PSPS). This could save companies millions of dollars by mitigating catastrophic events such as wildfires. Using the cloud can foster a culture of experimentation among analytics teams, enable innovation in both the short and long term, and help teams deliver contributions faster.

Utility companies fall into one of the four performance groups shown in Figure 1 (Elite, High, Medium, Low). Elite performers can deploy their machine learning models on demand, multiple times per day. This matches what utility companies seek in the long term: data-driven decisions backed by cutting-edge machine learning models that use sources such as weather data, drone data, and field data, which can have very high resolution in time and space.

Utility companies also have thousands of customers whom they need to retain and communicate with. Thus, stability metrics are of great importance. Minimizing time to restore service and change failure rates results in greater customer satisfaction and other long-term benefits for the company.

Using the cloud can help utility companies achieve Elite performance. An increasing number of Elite performers have adopted hybrid and multi-cloud, with significant impact on the business outcomes that companies care about. The Accelerate State of DevOps report found that respondents who use hybrid or multi-cloud were 1.6 times more likely to exceed their organizational performance targets than those who did not. They were also 1.4 times more likely to excel in deployment frequency, lead time for changes, time to recover, change failure rate, and reliability.

Companies that adopt multi-cloud for their products usually seek to leverage the unique benefits of each cloud provider and to avoid depending solely on the availability of one provider. Other factors, such as legal compliance, lack of trust in any single provider, and disaster recovery, also lead companies to use multiple public cloud providers.

In addition to pure performance targets, the cost of inaction should be weighed. Failures at utility companies can be catastrophic, for example, igniting a billion-dollar wildfire. Enabling analytics in the cloud can help the right results reach the right decision makers faster, informing decisions around PSPS and helping prevent these catastrophic events.

Utility companies today face significant business problems such as increased demand, threats from climate change, and aging infrastructure. They need to be competitive and innovative in solving these problems and to do more with less. Cloud technologies can help utilities produce solutions faster, but these investments also need leadership buy-in. IT leaders need to assess how well their current analytics approaches meet these challenges and determine whether a migration would increase the value of their data and processes and help them meet their business goals.

Case study: using machine learning to mitigate wildfire risks

Logic20/20 has been working closely with a team of data scientists at a large West Coast utility company. The team has developed cutting-edge machine learning models to help mitigate wildfire risk, but its lead time and deployment frequency for those models had significant room for improvement. Logic20/20 helped the team migrate its databases and models to AWS, and the models were adapted to use serverless technology, which moved them into production 96 percent faster. In addition, by providing comprehensive documentation, we made it easy for new team members to onboard and start contributing.
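A common pattern when adapting a model to serverless inference is to load the model once per execution environment (at cold start) and reuse it across invocations, so each request pays only for scoring. The sketch below illustrates that pattern with an AWS Lambda-style Python handler. The “model” is a hypothetical logistic scorer with made-up weights standing in for a real trained artifact, and the feature names are illustrative only; it is not the client’s model.

```python
import json
import math
from typing import Callable, Dict


def load_model() -> Callable[[Dict[str, float]], float]:
    """Hypothetical stand-in for deserializing a trained model artifact.

    The weights are illustrative, not from any real wildfire model.
    """
    weights = {"wind_speed_mps": 0.3, "vegetation_index": 1.2, "humidity_pct": -0.05}
    bias = -2.0

    def predict(features: Dict[str, float]) -> float:
        z = bias + sum(w * features.get(name, 0.0) for name, w in weights.items())
        return 1.0 / (1.0 + math.exp(-z))  # logistic score in (0, 1)

    return predict


# Runs at module import, i.e. once per cold start; warm invocations reuse it.
MODEL = load_model()


def lambda_handler(event: dict, context: object) -> dict:
    # Accept either an API-gateway-style JSON string body or a direct payload.
    body = json.loads(event["body"]) if isinstance(event.get("body"), str) else event
    score = MODEL(body["features"])
    return {
        "statusCode": 200,
        "body": json.dumps({"wildfire_risk_score": round(score, 4)}),
    }
```

Invoking the handler with `{"features": {}}` returns a score of 0.1192 (the logistic of the bias alone), and higher wind speed raises the score.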

On the operational side, Logic20/20 has designed and implemented multi-environment MLOps architectures that build on infrastructure and code templates already used across the organization. Our utility customers integrate security checks, approval gates, and production checklists into these templates, so deployments built on them comply with all of those checks. This culture of experimentation lets data scientists see their models in different environments, monitor their behavior, and set up triggers that notify them when metrics fall short of their standards.
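The approval gates and metric triggers described above can be sketched as a promotion check that runs before a model moves to the next environment. In this minimal version, each target environment has minimum metric values and a required number of human approvals, and any shortfall is returned as a list of problems that could be sent as a notification. The environment names, metric names, and thresholds are all hypothetical placeholders, not the client’s actual gates.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

ENVIRONMENTS = ["dev", "staging", "prod"]


@dataclass
class Gate:
    min_metrics: Dict[str, float] = field(default_factory=dict)  # metric -> minimum
    required_approvals: int = 0


# Hypothetical gate configuration for each target environment.
GATES = {
    "staging": Gate(min_metrics={"auc": 0.75}),
    "prod": Gate(min_metrics={"auc": 0.80, "recall": 0.70}, required_approvals=1),
}


def check_promotion(
    current_env: str, metrics: Dict[str, float], approvals: int
) -> Tuple[Optional[str], List[str]]:
    """Return (target_env, problems); an empty problems list means the gate passes."""
    idx = ENVIRONMENTS.index(current_env)
    if idx == len(ENVIRONMENTS) - 1:
        return None, ["already in the final environment"]
    target = ENVIRONMENTS[idx + 1]
    gate = GATES[target]
    problems: List[str] = []
    for name, minimum in gate.min_metrics.items():
        value = metrics.get(name)
        if value is None or value < minimum:  # missing metrics also fail the gate
            problems.append(f"{name}={value} below required {minimum}")
    if approvals < gate.required_approvals:
        problems.append(f"{approvals} approval(s), {gate.required_approvals} required")
    return target, problems
```

Keeping gate definitions as data rather than code means the same check can be reused in every pipeline template, with each team adjusting only the thresholds.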

We have worked closely with our utility clients to understand the unique needs of their business and build tailored solutions to meet those needs. Logic20/20 brings rich experience in analytics, partnering closely with businesses to unite technology with the people and processes around it and to create value for the organization.


Alexander Johnson is a senior data science and machine learning engineer in Logic20/20’s Advanced Analytics practice.

Payam Kavousi Ghahfarokhi is a senior data science and machine learning engineer in Logic20/20’s Advanced Analytics practice.