For organizations intent on increasing analytics ROI and making the most of Big Data, it’s important to optimize data science pipelines.

Data models and analytics pipelines can range in value from low (monetary loss due to development issues) to high (large resulting revenue). Since analytics models are worth nothing just sitting on a shelf, it can be tempting to deploy a model that is only mediocre. However, deploying an incomplete or poorly constructed model is a bad choice in the long run, since doing so costs time and money and will likely result in lower revenue.

What is a data science pipeline made of? What is involved in creating a pipeline?

A data science pipeline involves analytics and modelling methods stored in a variety of programs. In effective pipelines, information often traverses three to six different types of software/environments. For a pipeline to be a worthy investment, it must be deployed quickly and used properly.

While the deployment process is getting easier, many less-than-ideal strategies and technological hurdles still stand in the way of a rapid, smooth deployment. Organizations should be wary of one-size-fits-all solutions because they often hide substantial recurring costs and can be very difficult to migrate from.

What does an optimal pipeline deployment require?

Overall, the goals for deployment of pipelines are these, in order of priority:

- 1. Increase speed of deployment. Get fast results to generate revenue and recoup development costs.

- 2. Keep development costs below returns. Improve the model for the greatest return.

- 3. Increase scale of use. Expand the effect of the model by making it portable, so that it can be reused in other sectors of the business.

So what stands in the way of achieving these goals? Let’s dive in.

What is the fundamental problem that slows down software deployment?

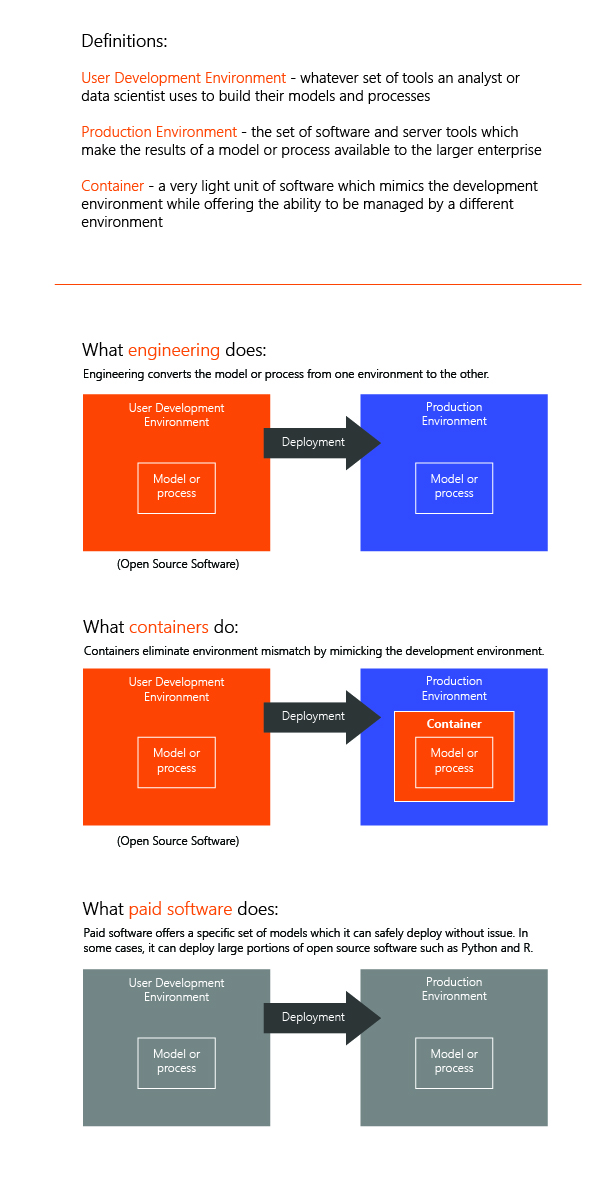

Software development can be a slow process because of one thing: the differences between development and deployment environments. Let’s look more closely at how these two stages differ:

Development needs a fast, flexible environment which allows rapid iteration and prototyping. Let’s look at each of these aspects more closely:

- 1. Fast. Computing speed translates directly into the speed at which analysts and data scientists can do their work.

- 2. Flexible. Analysts and data scientists need access to open source software and the ability to add and install software as needed.

- 3. Rapid iteration. Analysts and data scientists need a full stack of software that allows them to test, build, query, and design models or graphics, and then build them into a report.

- 4. Prototyping. Data scientists especially need the capability to simulate the true production data stream so that they may prototype the performance of their model in the wild.
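As a rough illustration of the prototyping point above, the sketch below replays historical records as a simulated event stream and runs a model over it. The names `simulate_stream` and `toy_model`, the `amount` field, and the sample records are all hypothetical, chosen only for this example; a real prototype would replay data shaped like the actual production feed.

```python
import random
from typing import Dict, Iterator, List


def simulate_stream(records: List[Dict], n_events: int, seed: int = 0) -> Iterator[Dict]:
    """Yield n_events records sampled (with replacement) from historical data."""
    rng = random.Random(seed)  # fixed seed so prototype runs are repeatable
    for _ in range(n_events):
        # Copy the record so downstream code cannot mutate the history.
        yield dict(rng.choice(records))


def toy_model(record: Dict) -> float:
    """Hypothetical stand-in for a real model."""
    return 0.5 * record["amount"]


# Hypothetical historical sample used to mimic the production feed.
history = [{"amount": 10.0}, {"amount": 20.0}, {"amount": 30.0}]

# Score each simulated event one at a time, as a deployed model would.
scores = [toy_model(event) for event in simulate_stream(history, n_events=5)]
```

Sampling from real historical data, rather than inventing values, keeps the simulated stream close to what the model will see once deployed.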

Deployment needs a highly scalable, responsive environment which has extensive logging and error handling. Let’s look at each of these aspects more closely:

- 1. Highly scalable. The solution needs to meet the expected demand, with enough room to grow.

- 2. Responsive. Depending on the needs of the end user, the deployment solution needs to be fast, either through batch-mode preprocessing or through a high-speed implementation of the model achieved by recoding or optimizing the pipeline.

- 3. Extensive logging. Very little about development of models or analytic pipelines suggests that logging is necessary, but when a process is elevated to a production level, it needs as much logging as is reasonable. The core principle of logging is that you record as much as possible about the process you are running so that you can troubleshoot and support it quickly and efficiently.

- 4. Error handling. This refers to the ability to gracefully handle many unexpected situations, such as missing values in an input data frame, poorly formed fields, or out-of-range values. Whatever the edge case might be, robust production-grade code that deploys analytic or data science pipelines needs to be able to handle it without breaking.
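The logging and error-handling points above can be sketched in a few lines using Python's standard `logging` module. This is a minimal illustration, not a production design: the function `score_record`, the `amount` field, the 0 to 10,000 valid range, and the scoring formula are assumptions made up for the example.

```python
import logging
from typing import Any, Dict, Optional

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("pipeline")


def score_record(record: Dict[str, Any]) -> Optional[float]:
    """Return a score, or None when the record cannot be scored safely."""
    raw = record.get("amount")
    if raw is None:
        # Missing value: log it and skip instead of crashing the pipeline.
        logger.warning("missing 'amount' in record %r", record)
        return None
    try:
        amount = float(raw)
    except (TypeError, ValueError):
        # Poorly formed field, for example a non-numeric string.
        logger.warning("poorly formed 'amount' value %r", raw)
        return None
    if not 0.0 <= amount <= 10_000.0:
        # Out-of-range value (hypothetical valid range); NaN also lands here.
        logger.warning("out-of-range 'amount' value %r", amount)
        return None
    score = 0.5 * amount  # hypothetical stand-in for a real model
    logger.info("scored record: amount=%s score=%s", amount, score)
    return score
```

Returning `None` for a bad record lets the caller decide whether to drop, retry, or quarantine it, while the log entries leave a trail for troubleshooting.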

How data is handled: Batch mode vs. real-time processing

It’s also necessary to think about how incoming data is processed in your pipeline. There are two strategies for handling data: batch mode or real-time processing. Batch mode involves calculating results ahead of time and storing them, while real-time involves calculating results just in time. Choosing between these two strategies is a big decision, since each delivers results with a different speed and currency. Stated differently, there is a critical decision between:

- 1. Processing the data in chunks (batch processing)

- 2. Processing the data as it comes in (real-time processing)
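To make the trade-off concrete, here is a small sketch that contrasts the two strategies. Everything in it is illustrative: `toy_model`, `batch_score`, `realtime_score`, and the customer data are hypothetical names and values, not part of any particular product.

```python
from typing import Dict


def toy_model(amount: float) -> float:
    """Hypothetical stand-in for a real model."""
    return 0.5 * amount


def batch_score(customers: Dict[str, float]) -> Dict[str, float]:
    """Batch mode: score every record ahead of time and store the results."""
    return {cid: toy_model(amount) for cid, amount in customers.items()}


def realtime_score(customers: Dict[str, float], customer_id: str) -> float:
    """Real-time: compute the score at request time from the current data."""
    return toy_model(customers[customer_id])


customers = {"a": 10.0, "b": 20.0}
stored = batch_score(customers)   # e.g. a nightly batch run

customers["a"] = 40.0             # the data changes after the batch run

stale = stored["a"]                       # lookup of the stored batch result
fresh = realtime_score(customers, "a")    # recomputed from current data
```

The stored batch result is a cheap lookup but reflects the data as of the last run, whereas the real-time call pays the computation cost on every request in exchange for an up-to-date answer.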

Let’s compare the two.

Batch Mode

Pros

- Easier from a technological standpoint

- Wide range of architectures with high-speed databases

- Well-suited for complex models with high performance

Cons

- Information is not kept as up-to-date as real-time processing

Real-Time

Pros

- More versatile with a longer lifecycle

- Mimics microservices architectures

- Lower tech overhead, high reusability, higher performance

Cons

- Sometimes creates islands of siloed software

With these environments and processing styles in mind, organizations can approach optimization of their data science pipelines with a few strategies.

The final decision

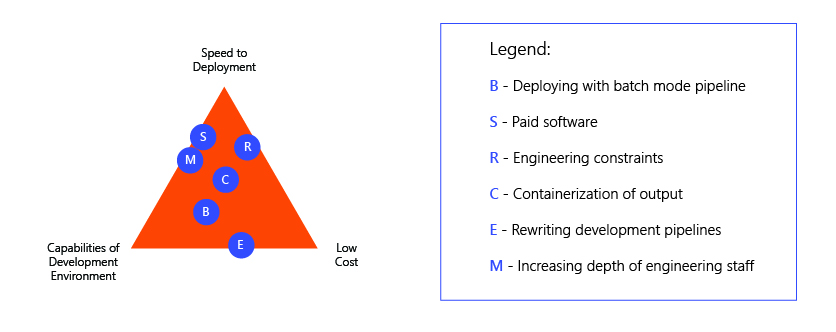

Reducing the time to deployment of analytics and data science pipelines requires pursuing one of the choices below. As you can see, each has benefits and drawbacks.

1. Deploy with a batch mode pipeline. This will reduce the need for high-performance real-time processing. Cost: Currency of data.

2. Minimize the differences between the development and deployment environments. This can be done in a variety of ways:

a. Use paid software. Get software that offers server functionality. Cost: Expensive and sometimes limited.

b. Apply engineering constraints. A restricted development environment can speed up deployment. Cost: Speed and agility of your data analyst/data scientist.

c. Containerize output. If the output of development pipelines is containerized, the environment it was developed in travels with it into deployment. Cost: Can create tremendous technical debt and a short shelf life for pipelines.

d. Rewrite development pipelines. Translate development pipelines into the language and structure that engineering prefers. Cost: Speed and accuracy. There are often big inconsistencies between the prototype and the rewritten pipeline, which can take significant time to troubleshoot.

3. Eliminate lag time. By increasing the depth of engineering staff, lag in deployment time can be reduced. Note that it may require as many as 4 machine learning engineers per data scientist to keep up with the rate of production of models and pipelines. Cost: Labor.

In summary, there are many attractive options for rapid deployment of analytics and data science pipelines. Each has a different place in the triad of “speed, capability, and cost” that should be considered early in the lifecycle. If speed to deployment is essential while managing cost, a restricted development environment is ideal. If an organization wishes to balance speed, capability, and cost, containerization wins. If cost is no issue, then the fastest and most capable option is either paid software or a staff of dedicated machine learning engineers.

Logic20/20 has extensive experience in rapid deployment of lower cost solutions, and we can both help you find which critical point is best for your organization as well as implement the solution itself. We have a highly talented staff of data science practice managers, analytics managers, machine learning engineers, developers, and analysts. We have an established history of achieving rapid ROI for our customers using their analytics and data science investments.

Anne Lifton is a lead data scientist in charge of deployment and development of data science models using Python, Kafka, Docker, PostgreSQL, Bazel, and R for production environments.