Professional worriers, arrested development, and a simple experiment

It’s my humble opinion that data scientists are essentially professional worriers. Don’t believe me? Here are a few examples of thoughts that might run through a typical data scientist’s head on any given day:

How accurate is my model?

What does my sample really say about the population?

How clean is the data that I’m working with?

Did I remember to turn off the stove?

Well, maybe not the last one, but you get the idea.

The other day, a few data scientists and I were stuck on a problem that many data scientists face. We had a model that had been running in production for two years, and in all that time we hadn’t been able to improve its performance. We had tried everything: different architectures, fancy embeddings, and the age-old data science quick fix of adding more data. Our stakeholders were beginning to grow restless. We were beginning to give up hope.

At a certain point, a thought started to circulate among us.

What if the limiting factor was the data quality?

If pop data science was to be believed, the only answer to our problems was more data and more layers. But how important is data quality, really?

I wanted to shed some light on the question, so I decided to set up an experiment. What would happen to a model’s performance if I took its underlying data and added more and more noise?

Garbage in, garbage out? A simple experiment and some surprising results

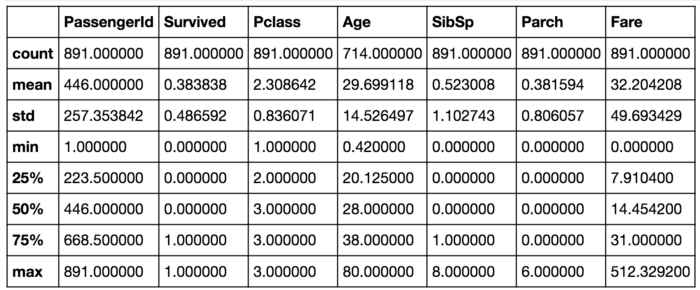

I set up an experiment with the Titanic dataset. The Titanic dataset is considered an entry-level machine learning dataset where passenger profile data (sex, age, ticket class, etc.) is used to predict whether or not a passenger survived their voyage on the Titanic. To mimic data quality issues, I took a random subset of the training data and reversed the outcomes. Passengers that had once perished had now miraculously survived and vice-versa. The goal was to see if adding noise to the data would confuse the model. I tested varying levels of noise and three common models: logistic regression, random forest, and support vector machine.
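The label-reversal step described above can be sketched in a few lines of plain Python. This is only an illustration, not the code used in the experiment: the function name `flip_labels`, the `noise_rate` parameter, and the toy `survived` list are all assumptions made for the example.

```python
import random


def flip_labels(labels, noise_rate, seed=None):
    """Return a copy of binary (0/1) labels with a random fraction reversed.

    Hypothetical helper: `noise_rate` is the share of rows whose outcome
    is flipped, e.g. 0.2 for 20% noise.
    """
    if not 0.0 <= noise_rate <= 1.0:
        raise ValueError("noise_rate must be between 0 and 1")
    rng = random.Random(seed)
    n_flip = round(noise_rate * len(labels))
    # Sample without replacement so no row is flipped twice.
    flip_idx = rng.sample(range(len(labels)), n_flip)
    noisy = list(labels)
    for i in flip_idx:
        noisy[i] = 1 - noisy[i]  # perished <-> survived
    return noisy


# Toy stand-in for the "survived" column (not real Titanic rows).
survived = [0, 1, 1, 0, 0, 1, 0, 0, 1, 0]
noisy = flip_labels(survived, noise_rate=0.2, seed=42)
print(sum(a != b for a, b in zip(survived, noisy)))  # 2 labels changed
```

Sampling indices without replacement keeps the noise level exact: at 20% noise, exactly 20% of the training outcomes differ from the originals.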

The Titanic dataset. (Source)

You’ll notice that neural networks are missing from the analysis. This is largely because I think that neural networks are overrated for tasks that don’t involve audio, images, or language. It’s also because neural networks are easy to overfit without large amounts of data.

My definition of overkill. (Source)

Next, I calculated baseline accuracy using clean data. Then I took the difference between the accuracy on the dirty data and the baseline to calculate accuracy loss. I ran each trial 100 times to get a more stable estimate.
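For readers who want to try something similar, the measurement loop might look roughly like the sketch below. Several things here are assumptions rather than details from the experiment: the names `accuracy` and `mean_accuracy_loss`, the idea of passing the model in as a `fit_predict` callable, scoring against a clean held-out test set, and computing the baseline once rather than once per trial.

```python
import random
from statistics import mean


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_accuracy_loss(fit_predict, X_train, y_train, X_test, y_test,
                       noise_rate, n_trials=100, seed=0):
    """Average drop in test accuracy when training labels are flipped.

    `fit_predict(X_train, y_train, X_test)` is a hypothetical callable
    that trains a fresh model and returns predictions for X_test.
    """
    rng = random.Random(seed)
    # Baseline: train on the clean labels.
    baseline = accuracy(y_test, fit_predict(X_train, y_train, X_test))
    n_flip = round(noise_rate * len(y_train))
    losses = []
    for _ in range(n_trials):
        # Flip a fresh random subset of training labels on every trial.
        noisy = list(y_train)
        for i in rng.sample(range(len(noisy)), n_flip):
            noisy[i] = 1 - noisy[i]
        noisy_acc = accuracy(y_test, fit_predict(X_train, noisy, X_test))
        losses.append(baseline - noisy_acc)
    return mean(losses)
```

Any model can be plugged in through `fit_predict`. With scikit-learn, for instance, a wrapper such as `lambda Xtr, ytr, Xte: LogisticRegression().fit(Xtr, ytr).predict(Xte)` should work, since `fit` returns the estimator itself.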

How important is data quality?

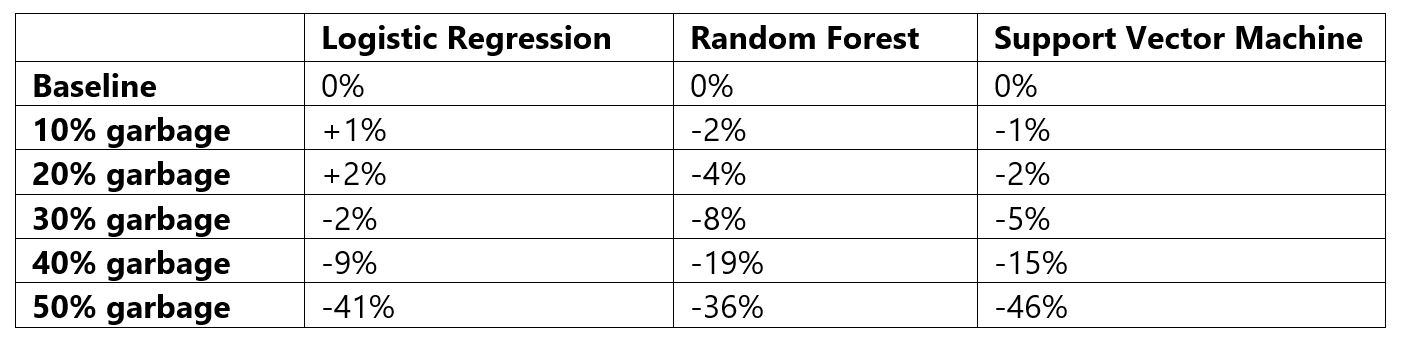

According to the results of this experiment, data quality is not as important as I had originally thought. Even at 20% noise, all three models only experienced moderate accuracy loss. Of course, even small losses in accuracy can translate to drastically different results. In fraud prediction, for example, a small loss in model accuracy could be catastrophic.

Which models tend to thrive in scenarios of poor data quality?

Overall, the logistic regression model was most resistant to data quality issues, losing only 9% accuracy at a 30% noise level. Comparatively, the random forest model lost 19% accuracy, and the support vector machine model lost 15% accuracy. At the same time, all models were relatively robust up until the 20% noise mark. These results should make most data scientists feel pretty optimistic. Maybe it’s time I take back my comments about adding more layers.

So why did logistic regression perform best overall? My guess is it has fewer moving parts, so it’s less likely to fit the noise.

So, what would I do if I had more time to research this question?

First, I would run the experiment with a larger variety of datasets to see if the effects seen above can be replicated.

Next, I would experiment with bias rather than noise. I would expect that bias, such as only reversing the outcomes of male passengers, would have much more pronounced effects on model accuracy. To use a sports analogy, it’s better to have no coach at all than one who actively teaches the wrong technique.
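A biased version of the corruption could be sketched by restricting the flips to one group. Again, this is a hypothetical illustration: `flip_labels_in_group`, the `in_group` mask, and the toy data are made up for the example, and here `noise_rate` is interpreted as a share of the group rather than of the whole dataset.

```python
import random


def flip_labels_in_group(labels, in_group, noise_rate, seed=None):
    """Reverse binary (0/1) labels only for rows where in_group is True.

    Hypothetical helper: `noise_rate` is the fraction of the group's rows
    that get flipped; rows outside the group are never touched.
    """
    rng = random.Random(seed)
    candidates = [i for i, flag in enumerate(in_group) if flag]
    n_flip = round(noise_rate * len(candidates))
    noisy = list(labels)
    for i in rng.sample(candidates, n_flip):
        noisy[i] = 1 - noisy[i]
    return noisy


# Toy data: corrupt outcomes for male passengers only.
survived = [1, 0, 1, 0, 1, 0]
is_male = [True, False, True, False, True, True]
biased = flip_labels_in_group(survived, is_male, noise_rate=0.5, seed=1)
```

Unlike uniform noise, these errors are correlated with a feature the model can see, so the model has a consistent wrong pattern to learn from.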


Peter Baldridge is a Senior Data Scientist at Logic20/20.